AIAgent Admin Portal

Copilot and AI Agent Solutions with Azure OpenAI/ChatGPT

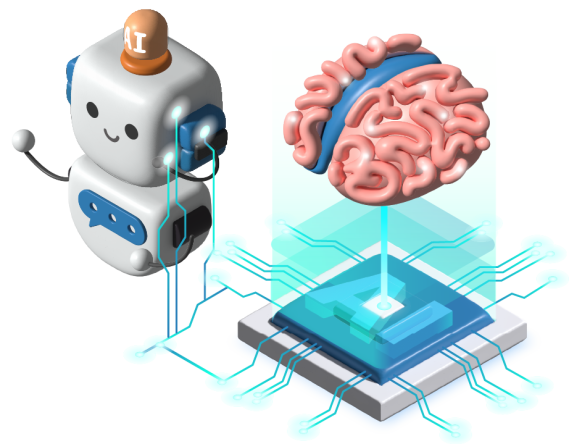

INTUMIT’s AIAgent Admin Portal integrates LLMs and Copilot features to create cutting-edge artificial intelligence products based on NLU/NLP.

Utilizing Azure OpenAI/ChatGPT models, our customers can leverage the latest Copilot and NLP technology to automatically generate training data, detect intents, and generate initial commands to streamline the conversation building process, reducing time and manpower requirements.

AIAgent Admin Portal uses less training data and provides higher accuracy. We aim to deliver smarter, more advanced generative AI and Copilot services that support multiple languages, enabling multinational brands and companies to create AI Agent solutions at lower cost. Our Agentic AI, featuring multi-model AI agents, provides customized solutions for every client.

To improve customer and employee experiences, Intumit prioritizes the predictiveness, applicability, and versatility of its Copilot and AI Agent features during development and fine-tunes them accordingly.

Intumit AIAgent Admin Portal can create personalized Copilot and AI Agent features that best fit enterprise needs. Examples include contract comparison, meeting note summaries, fraud/scam detection, and more.

Semantic Search, Automatic Documentation, Voice Interaction, and Multilingual Support are the most used modules.

Use machine learning to transform static FAQs into a specialized structure built around key domain terms.

When a user poses multiple questions in a single inquiry, the AI Agent retrieves the matching answers from the FAQ knowledge base. It then uses an LLM to blend them into a single response that meets the customer’s expectations, which lets it handle challenging and complex queries.
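The multi-question flow described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the portal's actual implementation: the `FAQ` data, every function name, the word-overlap (Jaccard) matching, and the `threshold` value are all hypothetical stand-ins, and the final step only builds the prompt that would be sent to an LLM rather than calling one.

```python
import re

# Hypothetical FAQ knowledge base (illustrative data only): question -> answer.
FAQ = {
    "How do I reset my password?": "Use the 'Forgot password' link on the sign-in page.",
    "What are your support hours?": "Support is available 9:00-18:00 on weekdays.",
    "How do I update my billing address?": "Edit the address under Account > Billing.",
}


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_questions(inquiry):
    """Split one inquiry into sub-questions, cutting after each question mark."""
    parts = re.findall(r"[^?]+\??", inquiry)
    return [p.strip() for p in parts if p.strip()]


def retrieve(question, faq, threshold=0.3):
    """Return the best (faq_question, answer) pair by Jaccard overlap, or None."""
    q_tokens = tokenize(question)
    best, best_score = None, 0.0
    for faq_question, answer in faq.items():
        f_tokens = tokenize(faq_question)
        union = q_tokens | f_tokens
        score = len(q_tokens & f_tokens) / len(union) if union else 0.0
        if score > best_score:
            best, best_score = (faq_question, answer), score
    # Assumed cutoff: weak matches are treated as "no FAQ entry found".
    return best if best_score >= threshold else None


def build_blend_prompt(inquiry, matches):
    """Assemble the text an LLM would receive to blend the retrieved answers."""
    lines = [
        "Answer the customer's inquiry using only the reference answers below.",
        f"Inquiry: {inquiry}",
        "Reference answers:",
    ]
    for i, (faq_question, answer) in enumerate(matches, 1):
        lines.append(f"{i}. {faq_question} -> {answer}")
    return "\n".join(lines)


def answer_inquiry(inquiry, faq=FAQ):
    """Retrieve one FAQ match per sub-question and build the blending prompt."""
    matches = []
    for sub_question in split_questions(inquiry):
        hit = retrieve(sub_question, faq)
        if hit and hit not in matches:
            matches.append(hit)
    return build_blend_prompt(inquiry, matches), matches


if __name__ == "__main__":
    prompt, _ = answer_inquiry(
        "How can I reset my password? What are the support hours?"
    )
    print(prompt)
```

In a real deployment the word-overlap matcher would presumably be replaced by semantic search, and the prompt would be passed to the configured LLM to produce the blended reply.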

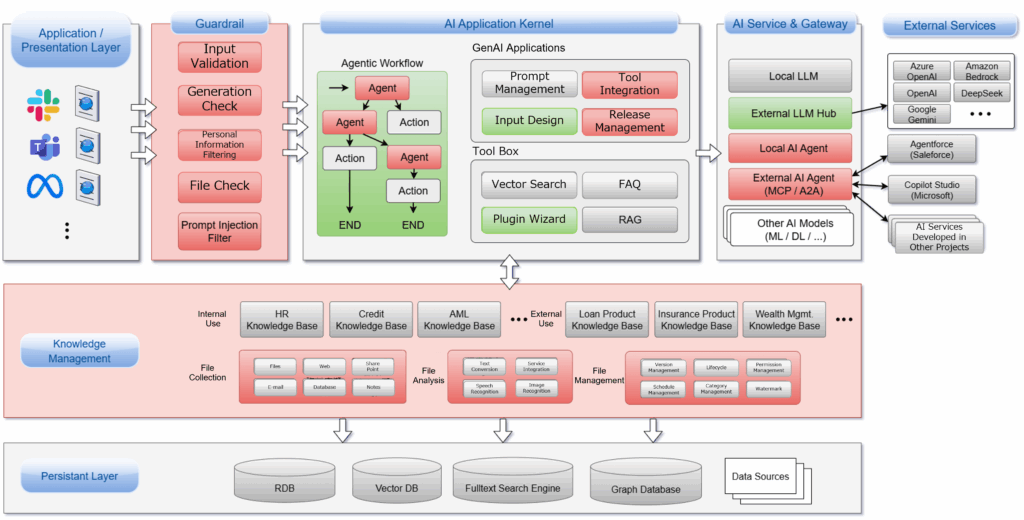

An Azure OpenAI-integrated large language model (LLM) generates answers by processing uploaded documents and user queries. It lets users answer questions from unstructured text documents without creating and training individual FAQs.

Using an Azure OpenAI-integrated large language model (LLM), users can generate reference answer content by providing only the question, with settings for the tone, role, and direction of the response.

Upload STT, LINE CALL voice files, or other voice system files to the platform for voice content parsing, and generate key summaries or Q&A based on the parsed content.

Optimize answer content for tone, language variations, response methods, and more.

Build AI Agents quickly with fewer training resources

Integrate traditional flyers, reports, registrations, and email notifications into a single channel

Execute extensive and complex tasks through AI-powered conversations

Handle multiple languages without the need for translation personnel

Learn complex structural logic like a human

Design marketing and shopping guide processes

Shorten service development time

Reduce costs

Obtain more precise answers

Agentic AI (AI Agents) for tailored service and tasks

AIAgent Admin Portal – Azure AI/ChatGPT Conversational Service